Dignity

Potential risks to the child’s dignity

These questions could be posed as part of your risk assessment practices:

- Does the child have a genuine connection with the brand, and do they understand that they are advertising the brand's goods/services?

- Is the content respectful of the child, e.g. does it avoid tricks or pranks that could be viewed as humiliating or upsetting?

- Could the content be artificially manipulated to misrepresent the child in some way, e.g. AI sexualisation of the child?

- When posting content, could information be given away that might make it easier to contact and/or harm the child?

Genuine connection

Adam

An agency has seen Adam's football-related content online and thinks that his sporty look is ideal for one of its big clients, a fast-food chain.

Adam prefers to eat home-cooked meals and does not like burgers or fried foods. The agency will pay for a reel of him eating chips and an adult-size burger to "provide energy" before a football match, but Adam's Mum knows he is unlikely to enjoy the food.

Flick

Flick's parents have been approached by a company that is promoting its gymnastics leotards. They have been provided with some samples for Flick to try on and possibly to wear in her TikToks, with potential earnings of €500 per post.

Flick usually wears 'Ribbon' leotards. She likes them because their branding has a flicking ribbon on each leotard.

Risk management

Brands and agencies will be attracted to child/family accounts with high viewer numbers and engagement, but also where children have a certain look, style or hobby. While this can provide some sorely needed extra income for families and/or provide equipment and support for expensive hobbies/sports, this is word-of-mouth advertising and viewers will forever connect the child to that brand and their identity.

In contrast to traditional forms of advertising, where a child would be playing a role or a character, here the brands are trading on the personality, appearance, and reputation of the child as an authentic person. This means that the child will be viewed as being associated with that brand and what it represents, as a real human being.

Parents consenting to appearances on behalf of the child should consider the brand’s activities and reputation, as well as any potential for stigma that such an association could cause for the child in the future. Additionally, where possible, children should be informed that this is a form of advertising, and the brand should represent a natural choice that they might make themselves, if asked.

Respectful content

Bea

Bea's parents usually post about Bea being a troublesome toddler, who is rapidly changing from being a baby to a little girl with her own voice and opinions.

Bea's account name is still listed as BayBea, and her parents regularly use hashtags such as #naughtygirl and #terribletwos within the content.

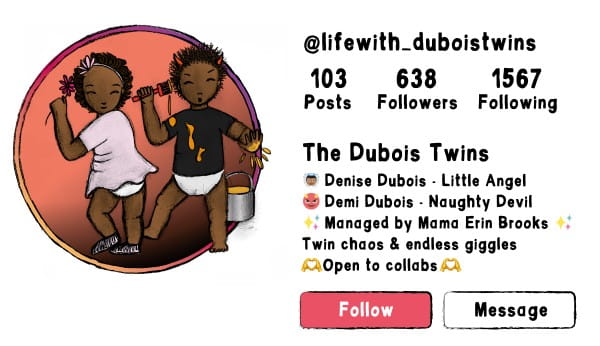

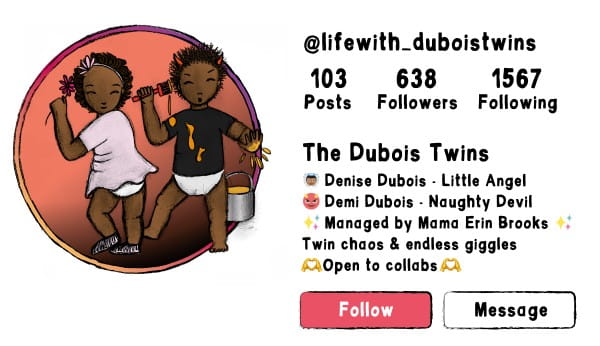

Demi and Denise

Demi and Denise's mother regularly films herself and the girls doing the 'flour face' challenge. In this challenge, parents sit in front of the children, with flour in their hands, and answer questions such as which child is the kindest, which is the funniest, and which is the cutest.

Demi regularly seems to be the loser in this game, which means that her mother then blows the flour into her face.

Edi

Edi's father occasionally 'pranks' the girls, sometimes pretending that he has cut himself and is 'bleeding' fake blood, which makes them scream.

On one occasion he mixed sherbet with water and then wiped the foamy mix around their dog's mouth, shouting that the dog had gone wild and was going to attack everyone. The girls got very upset when they saw Prince with a foaming mouth and in distress.

Risk management

Parents who feel isolated may produce content for other parents, rebelling against the glossy imagery of the 'perfect Insta parents' or 'yummy mummy'. This has given rise to the 'slummy mummy' trend, where mothers depict more real and gritty truths about modern parenting. In reality, however, this content can depict quite raw moments of parenting, and parents can produce content that mocks their child, shows their tantrums, or depicts them in a negative light.

While these types of content appear to have been socially normalised and condoned, the sharing of these intimate private scenes could prove to be embarrassing for the child, as they are being portrayed as troublesome or naughty.

Trends, pranks, and challenges have also become a regular feature of social media content and can be fun things to get involved with as a family, or with friends or peers. While most of this content is intended as fun, some challenges have had extreme consequences, even resulting in fatalities, and some content appears to make fun of or humiliate children.

There are also situations where prank or hoax content can result in extreme distress or upset for children, who are not able to understand that this is seen as a joke by the adult.

Take a beat - When planning content and message delivery, consider the issues set out above and how the content depicts the child and the impact it might have on them now or in the future. For pranking or challenge content, ensure that the child is ‘in on the joke’ and treat it as an informed and practised performance for camera, rather than something that might upset or distress the child.

Photo manipulation

Bea

Bea's parents usually post about Bea being a troublesome toddler, who is rapidly changing from being a baby to a little girl with her own voice and opinions. Bea's account name is still listed as BayBea, and her parents regularly use hashtags such as #naughtygirl and #terribletwos within the content.

Bea's account has been live since she was born and there are many posts of her naked in the bath, or in nappies lying in her cot.

Demi and Denise

Demi and Denise's account regularly portrays the girls in devil/angel costumes and/or with horns or wings added as filter effects. Demi is jokingly referred to as a 'naughty devil', whereas Denise is called a 'little angel', and the girls are dressed and staged in that way in a lot of their content.

Risk management

While some of this content might seem cute or playful, it may raise dignity issues for the child, or attract those with poor intentions to the account.

Content fed into social media platforms and/or AI apps not only provides valuable data on the child but also supplies imagery that can be captured and/or manipulated. Research indicates that images where children are wearing swimwear or underwear are saved and shared more often than any other type of child-related content. Additionally, apps like FaceSwap and Nudify can be used to manipulate these images into child sexual abuse material, which can then be shared and exchanged with other users.

Similarly, other sources report that for some female child influencer accounts, 73% of their followers are adult males and that, where adult users have shown an interest in sexualised images of children, the platform algorithms will actively push child influencer content into their feed. Such content can also be found and amplified through searches for specific hashtags like #babygirl and #daddysgirl.

Consider what information is being shared, to whom and for what purpose, and how the child is being portrayed within both the imagery and any descriptive content or hashtags. Seemingly meaningless and innocent usernames and tags can put a target on certain accounts for those wishing to use the content for illicit purposes.

Similarly, photos of the child less than fully clothed, such as those from family beach holidays, may compromise their dignity and are best kept within closed family sharing groups.

Take a beat - When planning content and message delivery, consider the issues set out above and how these could be minimised and/or edited out of the uploaded content before posting.

Identifying content

Adam

At weekends, Adam usually has training on Saturday and a match on Sunday. In his spare time he plays FIFA on his Xbox, which his Dad sometimes live streams, showing viewers Adam's online username.

The schedule for Adam's football team is posted on their public Facebook page, along with posts about their social team-building activities and end-of-the-season celebrations. Photos and reels of Adam in his football kit clearly show the team logo and the kit sponsor's company name.

Cole

Cole's parents add to his 'Highlights' school story each year, posing him at the front door in his school uniform and adding comments about his height or hair changes etc.

Although Cole's Mum has blurred the school logo on his uniform, the colour is distinctive and in earlier posts she has mentioned him going to a local all-boys school.

Flick

Flick's parents are very proud of her gymnastics achievements and have even built a small practice area for her in their basement. Her reels often show her practising at home and in her room with her trophies and medals, as well as footage from national and international gymnastics events.

The house is in a very expensive part of town and the content clearly shows a lot of high-value gadgets, electrical goods, and jewellery.

Risk management

As explored within the financial section, information that is shared about children can potentially build a digital profile of the child, but it also gives away a lot of information that could make it easier to connect with them virtually or physically. Recent research by the NSPCC identified that 37% of children who had been groomed stated that Instagram was the source of their grooming.

Parents would hopefully be in control of the management of their child’s accounts and message services, and they are also responsible for making sure that the content does not provide information or access to those wishing to harm their children.

Content that gives away information about a child’s gaming identity could allow others to contact them through those platforms, while content giving away geographical information could allow people to contact the child in person. This includes information about the home, school, sports clubs, or external events that the child might be attending.

Consider what information is being shared, to whom and for what purpose, and what identifiable data about the child is being disclosed. Is it possible to remove/limit these details in order to decrease the risk of online or physical contact with the child?

Take a beat - When planning content and message delivery, consider the issues set out above and how these could be minimised and/or edited out of the uploaded content before posting.

© Copyright University of Essex 2025

Health and safety