GCHQ’s Ethical Approach to AI: An Initial Human Rights-Based Response

This post originally appeared on about:intel on 5 March 2021.

GCHQ, one of the UK’s intelligence and security agencies, recently released its new AI and Data Ethics Framework, which will guide the agency’s future use of AI. This step towards public engagement and transparency is laudable, but the report’s content raises concerns. Namely, it proposes a vague ethical approach rather than a concrete one based on human rights. Moreover, it raises questions as to how GCHQ approaches the “necessary in a democratic society” test used to evaluate the lawfulness of certain measures, a test that is especially critical in the field of intelligence.

On 25 February, GCHQ released “Pioneering a New National Security: The Ethics of Artificial Intelligence”, in which it sets out, in broad terms, the AI and Data Ethics Framework that will guide the agency’s future use of AI. This is a hugely significant document for what it represents: a deliberate move towards public engagement, transparency, and the establishment of public trust. GCHQ notes that the publication of this framework “is the first step of a much longer journey: we’d like you to join us on it”. In that spirit, we would like to use this article to highlight two human rights-based concerns arising from an initial reading of the paper: first, the apparent prioritisation of an ethical approach over an explicit human rights-based approach; and second, how the “necessary in a democratic society” test is approached.

The benefits of a human rights-based approach

The report quotes the Alan Turing Institute in noting that “the field of AI ethics emerged from the need to address the individual and societal harms AI systems might cause”, and the framework developed by GCHQ draws heavily on the Institute’s landmark research in this area. The difficulty with an ethics-based approach, however, is that while ethics can make a significant contribution to how we think about AI, and how we grapple with the ‘big issues’, ethics is not, and is not intended to be, a governance framework (see here, e.g., on “ethics as an escape from regulation”). It does not, for example, establish shared understandings of harm, or mechanisms through which that harm may be identified and addressed. The absence of shared understandings, and of an agreed vocabulary to address the implications of AI, thus leaves space for normative and value-laden beliefs to become encoded within ethical standards. This issue may be illustrated by reference to two components of the GCHQ report:

- in establishing “the major ethical challenges”, fairness is referred to as “that sense of equity and reasonableness we all know when we experience it, but probably would find difficult to perfectly define”. It is noted that best practice in managing the risks posed by AI involves, among other elements, “documenting the characteristics of what ‘fairness’ might look like”.

- similarly, “questionable technical design” and “unintended negative consequences” are highlighted as two of the common sources of problems found around AI systems. These are defined, respectively, as “technical risks related to bias and safety not being fully resolved by designers” and “the potential for systems to cause harm to individuals or communities not being foreseen or tackled during development”.

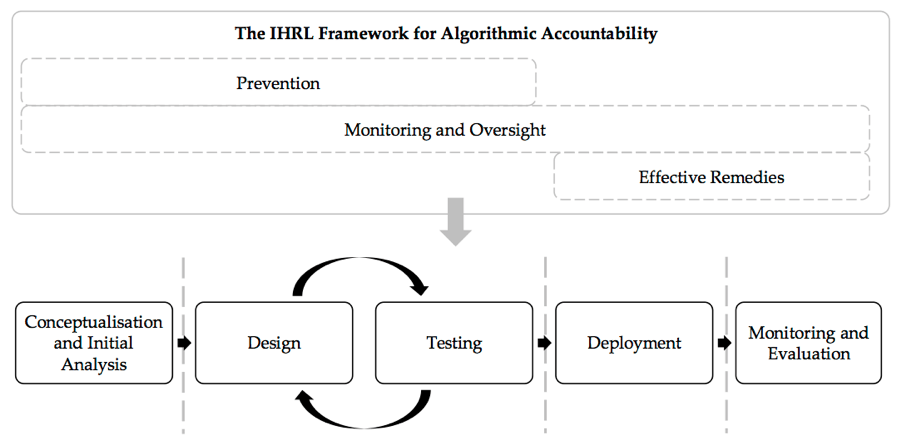

Human rights law, on the other hand, is explicitly designed to identify and protect against ‘individual and societal harms’, and it provides an organising framework with which to approach the design, development, and deployment of AI. McGregor, Murray, and Ng have discussed this framework in detail, and two key benefits identified in their work may be highlighted here. First, human rights law provides a means both to define and to assess harm. It establishes a number of substantive rights, extending across all areas of life and benefitting from near-universal recognition; the content of these rights has been elaborated in detail through decades of case law and soft law; and human rights law also establishes mechanisms to determine whether a particular activity constitutes a lawful interference with a human rights protection or an unlawful violation.

Second, human rights law imposes specific obligations on States (and expectations on businesses) to protect human rights, and sets out the mechanisms and processes to give effect to these obligations. Importantly, human rights law applies at all stages of the AI lifecycle, from design and development through to deployment. It is therefore capable of both guiding processes and decision-making, and providing a route to remedy and accountability.

Figure 1. Source: Human Rights, Big Data and Technology Project

The added value of human rights law over ethics as an organising framework may be demonstrated by reference to the two examples excerpted above:

Rather than attempting to define ‘fairness’, or to document its characteristics in different contexts, human rights law offers an alternative, more concrete, approach. It establishes processes to determine (a) what rights are brought into play by a particular AI tool or deployment (these will vary depending on the context, and a number of rights may be affected; e.g., an operation may bring into play both the right to privacy and the right to life) and (b) whether or not those rights are violated. In essence, a human rights-based approach facilitates a move from the abstract to the concrete.

Equally, an effective and appropriately deep human rights impact assessment will help to identify and address technical risks and unintended consequences. Human rights impact assessments are directed towards identifying the potential impact on human rights (whether direct or indirect) associated with a particular tool or deployment. They incorporate the full set of substantive and procedural rights, and are therefore a key means by which overall potential utility and harm can be identified and addressed, whether through mitigation or a decision not to deploy. Interestingly, in a parallel area of UK state surveillance, the Surveillance Camera Commissioner has called for the development of an ‘integrated impact assessment’ for use in relation to police live facial recognition deployments.

In proposing a human rights approach, two important points must be noted. First, we do not suggest that a human rights-based approach should exclude ethical considerations; that would clearly be inappropriate. Rather, human rights law can be used as an organising framework, capable of incorporating other approaches, whether ethical, technical, or otherwise. Second, we do not suggest that human rights law has all the answers. AI represents a step change in surveillance and decision-making capabilities, and much new thinking will be required. But human rights law does offer a concrete and consistent basis from which to approach these challenges, while building on the UK’s existing legal obligations. We think there is definite merit in developing this approach in the context of intelligence agency activities.

The “necessary in a democratic society” test

GCHQ appropriately highlights the importance of the “necessary in a democratic society” test established by human rights law as a means of evaluating the lawfulness of certain measures. The report states that:

“GCHQ undertakes an assessment of the necessity and proportionality of any intrusion into privacy both when considering the use of operational data to train and test AI software, and when applying the software to the analysis of operational datasets. The assessment of necessity and proportionality is already integral to the activity our people undertake:

Necessity. This requires the analyst to ensure that the activity is necessary to meet a legally valid intelligence requirement which falls under one of the Intelligence Services Act 1994, and there is no reasonable prospect of obtaining the wanted information through other means.

Proportionality. Ensuring proportionality requires the maintenance of a justifiable case-by-case balance between the intrusiveness of what is planned and the value that would be derived, whilst representing the minimum interference necessary to achieve it”.

Although the report gives no detail on the specifics of this approach, other than that it is subject to review by the Investigatory Powers Commissioner’s Office (IPCO), a few concerns, or opportunities for further engagement, can be flagged. This is an area where more detail, at least on how overarching questions are approached, would greatly help to establish trust in how GCHQ works.

The “necessary in a democratic society” test is intended to ensure the overall rights compliance of any measure. It addresses the “competing interests” arising in particular contexts. For example, a particular AI-enabled tool may be useful in identifying individuals involved with a terrorist organisation (pursuing the legitimate aim of ensuring national security), but may pose risks to privacy, including the risk of individual stigmatisation, and to other rights. These are the “competing interests” at play. In resolving them, the state must identify both the potential utility and the potential harm of any deployment, in light of the constraints of a democratic society.

In practice, applying the “necessary in a democratic society” test is notoriously difficult: the content of human rights law provisions has been developed primarily through ex post accountability processes, and translating these findings so that they may effectively inform ex ante processes is far from straightforward (read the detailed discussion).

As such, questions arise as to how GCHQ approaches this test. In terms of demonstrating the utility of a particular measure, for example, are assessments made at a sufficiently granular level, on a case-by-case basis, and supported by a sufficiently robust evidence base? In terms of potential harm, is a full rights impact assessment conducted? It is of concern, for instance, that the report focuses primarily on the right to privacy, despite the impact that AI-enabled surveillance and analysis can have on the broader spectrum of democratic rights, and frames discrimination and bias primarily as an ethical, rather than a human rights, issue.

Another concern is that the necessity test may, in practice, be applied narrowly, essentially as “necessary to achieve the legitimate aim pursued” (this has been documented in the context of law enforcement surveillance). The statement on page 31 of the report, that satisfying the necessity test involves an analyst ensuring that “there is no reasonable prospect of obtaining the wanted information through other means” hints at this (inappropriately) narrow interpretation. It is entirely possible that a particular AI tool or deployment may be the only way of obtaining a particular piece of information, but that deploying that tool (in that context) would not remain faithful to democratic principles, and so could not be considered necessary. The test is not one of necessity per se, but whether the measure is “necessary in a democratic society”. The broader democratic principles mentioned above must be taken into account.

Conclusion

These concerns are raised in a context of positive engagement. We do not claim that human rights law has all the answers, or that we have ready-to-implement solutions to the issues raised. We do believe, however, that human rights law offers an appropriate organising framework through which to address GCHQ’s engagement with AI, and that the concerns raised in this post merit further consideration.

In the past we have tackled issues such as these by organising a series of workshops in collaboration with IPCO, and we believe that similar work focused on AI and human rights impact assessments, for example, could offer significant added value. What is clear is that the issues surrounding how AI is deployed in an intelligence agency context are complex and can only benefit from further engagement, research, critique and challenge, from a variety of different points of view.